A more convincing case for longtermism

Why we longtermist EAs should stick to our (idiomatic) guns when pressed, and how we can do this in practice.

I’ve noticed some EAs engage in what appears to be a motte-and-bailey[1] style argument regarding the importance of focusing on the longterm future as an EA priority.

The particular motte-and-bailey I’m referring to goes something like this:

EA: Longtermism is really important. The future of humanity faces unprecedented risk from advanced technologies like transformative artificial intelligence, and these risks are tragically neglected by others. This means, in expectation, one of the best things someone can do to improve others’ lives is focus on preventing existential risk.

Critic: This effective altruism stuff sounds like a bunch of baloney. There are literally 700 million people living in extreme poverty, and you’re worrying about improbable science fiction-sounding risks preventing some unborn people from coming into existence? How can you claim to “do the most good” if what you’re advocating for glosses over current suffering?

EA: Oh, but the EA community currently spends a lot of money on global health and has saved tons of lives with malaria nets. Effective altruism has done a lot of good, and we really place a considerable focus on global health. Why would anyone have such a problem with something that is clearly doing good things in the world?

This counterargument, at least on its own, does not help explain the motivation for longtermism in the EA community, and we should present a more substantial rebuttal. Truth-seeking should always be upheld, especially under fierce (and sometimes even bad faith) criticism.

Moreover, I think this motte-and-bailey is unnecessary. It is more of a shorthand response to our critics that doesn’t fully address the strongest version of the argument they’re making.

Many EAs have switched to focusing on longtermism, but that’s fine

It feels essential to first make it clear that the EA community is not a homogeneous blob of worldviews. Some EAs don’t care about longtermism whatsoever, focusing instead on legible ways to improve existing people’s lives, like providing vitamin A supplementation to children living in poverty. Others overwhelmingly think that the most important thing to work on is protecting future generations. Still others mostly want animals to live happier lives. Some want all of these things.

That being said, it does feel reasonable to claim that longtermism has recently become a core focus (if not the core focus, as I argue in Appendix 1) amongst many EAs, especially among those most heavily engaged. I should note that even if you disagree with me that longtermism is the core focus, I still think the broader point holds: longtermism should primarily be defended by its adherents on its own merits, rather than by gesturing at global health’s track record of success. But I digress.

Either way, having EAs focus on longtermism is... fine? EA, in a nutshell, is the practice of maximising the amount of good you do with your career, projects, and donations. According to this definition of effective altruism, there’s no reason that longtermism can’t be a perfectly reasonable thing to focus on.

To argue that EA is bad, critics either have to make the case that (1) this definition of EA is not a good thing to do or (2) it is good, but many EAs are failing to do it in practice by focusing on longtermism.

(1) seems like a difficult thing to argue — I feel that even the most staunch critics would generally agree that doing good is good, and doing even more good is gooder, all else being equal — which is why most of them instead argue (2). But in making argument (2), one has to say that future generations do not matter (or matter very little), and/or we are helpless to do anything to benefit them. While easier than arguing (1), I still think (2) isn’t an easy argument to make.

Retreating to the global health motte when pressed on the reasons for the EA community’s focus on longtermism feels like an easy way out of presenting the core reasons why protecting future generations is so important. We longtermists don’t have the concrete wins that the Against Malaria Foundation has. Still, we should stick to our guns and be transparent about why we choose to focus on protecting future generations nonetheless.

Poorly communicating the reasons for the shift in priorities

The EA community has generally done a poor job of explaining the reasons behind the shift from global health to longtermism to those outside the community. I’ve seen a few arguments, albeit generally bad-faith ones, claiming EAs ignore people in extreme poverty to instead focus on sci-fi-esque existential risks that might affect future people billions of years from now.[2]

These arguments are generally quite bad, but we should take them seriously. The original focus of EA was global health, and it is trivial to point out[3] how many of the first EAs, some of whom have almost entirely shifted their focus to longtermism, took the challenge of abolishing poverty seriously. EA has made serious progress toward improving the well-being of people living in poverty, and it’s misleading to deny that.

But pointing to the work we’ve done on global health doesn’t fully address what I imagine is the root of the argument: “oh, you supposedly care about being altruistic, but you’re now spending millions of dollars on things that do nothing to solve real-life problems that are happening right now?”

A stronger rebuttal, in my view, is that caring about future people says nothing about how much we care about present-day people. Indeed, it feels intuitive that the same line of thinking that caused the first EAs to care about poverty can be used to justify a newfound focus on longtermism.

We longtermists still, as Kelsey Piper once put it, want to “[level] a battering ram at global inequality”. But unfortunately, we have to triage our finite resources according to how they can do the most good. However, that doesn’t mean we have to triage what we care about as ruthlessly.

I’m disgusted to live in a world where hundreds of thousands of children die each year from a disease we know how to prevent. I’m still appalled by the absolute insanity of factory farming and the cruelty that nature imposes on wild animals. Yet my heart also aches for future generations whose livelihoods are being gambled with just as much as it does for those marginalised today. I’ve seen the harms of the present, and I worry the future could be as bad (or worse) for even more people and animals, or devoid of any value whatsoever. Just as the geographic distance between me and someone in need is a morally irrelevant factor, so is when someone in need is alive.

This motivation remains hidden when retreating to the global health motte, and I think presenting it in the open will improve the discourse considerably.

How we can do better

As Will MacAskill said, “Future people count. There could be a lot of them. We can make their lives better.” None of this means present-day people do not matter or that we don’t care about poverty. We do, and we longtermists should do a better job of making that crystal clear. Moreover, it’s worth mentioning that we can partially justify focusing on problems like AI risk and pandemic prevention on neartermist grounds by noting that preventing all of the people alive today from dying is, well, really darn important as well.

With all that being said, here is what a more convincing defence of the EA focus on longtermism might sound like:

EA: Longtermism is really important. The future of humanity faces unprecedented risk from advanced technologies like transformative artificial intelligence, and these risks are tragically neglected by others. This means, in expectation, one of the best things someone can do to improve others’ lives is focus on preventing existential risk.

Critic: This effective altruism stuff sounds like a bunch of baloney. There are literally 700 million people living in extreme poverty, and you’re worrying about improbable science fiction-sounding risks preventing some unborn people from coming into existence? How can you claim to “do the most good” if what you’re advocating for glosses over current suffering?

EA: I care about the 700 million people alive today in poverty just as much as I care about people who will be alive in the future. But my ability to help the world is constrained by the fact that I can’t work on everything at once. I therefore have to make tough calls about where I focus my time and energy. I firmly believe that:

Future people are morally significant.

There could be a lot of them if things go well.

I can make a positive difference in their lives by focusing on the biggest threats to humanity’s future.

Unfortunately, these threats are far from negligible, and they would also have profoundly bad consequences for those alive today. And because relatively few people are working to protect the future, I think that the best way for me to practise effective altruism (aka doing the most good I can with my time and resources) is by focusing on making sure the future goes well, even though I wish I could also work on reducing poverty concurrently.

Appendix 1

I base the following claim:

“Longtermism has recently become a core focus (if not the core focus) amongst many EAs right now, especially among those who are most heavily engaged”

on three pillars: (1) what the most influential EAs are focusing on, (2) data from community surveys, and (3) spending data.

Influential EAs

Let’s hear from Sam Bankman-Fried in his latest 80,000 Hours interview:

Rob Wiblin: We’ve been mostly talking about the longtermist giving. Do you want to talk for a minute about the giving focused on more near-term concerns, like global poverty and animal welfare?

Sam Bankman-Fried: Yeah. We do some amount of giving that is more short-term focused, and some of this is in connection with our partners. I think some of this is trying to set a good standard for the fields, and trying to show that there are real ways to have positive impact on the world. I think that if there’s none of that going on, it’s easy to forget that you can have real impact, that you can definitely have a strong positive impact on the world. So that’s a piece of it. But frankly, to be straightforward about it, I also think that in the end, the longtermist-oriented pieces are the most important pieces, and are the pieces we are focusing on the most.

Of course, Sam is just one EA. But he happens to be the one who owns a significant chunk of the community’s funding, and so his views on cause prioritisation are important.

Or, take it from Toby Ord in the introduction of The Precipice:

“Since there is so much work to be done to fix the needless suffering in our present, I was slow to turn to the future. It was so much less visceral; so much more abstract. Could it really be as urgent a problem as suffering now? As I reflected on the evidence and ideas that would culminate in this book, I came to realise that the risks to humanity’s future are just as real and just as urgent—yet even more neglected. And that the people of the future may be even more powerless to protect themselves from the risks we impose than the dispossessed of our own time.”

If that’s not enough, let’s hear from Will MacAskill in his most recent appearance on the 80,000 Hours podcast:

“And I thought [longtermism] was crackpot. I thought this was totally crackpot at the time. In terms of my intellectual development, maybe over the course of three to six months, I moved to thinking it was non-crackpot. And then over the course of a number of years, I became intellectually bought in when I wrote Doing Good Better. So I first met Toby Ord in 2009. When I wrote Doing Good Better, I have some material on global catastrophic risks in there. I considered having much more, but decided I didn’t want to make the book too broad, too diffuse. Then it was by 2017 that I thought I just want to really pivot to focusing on these issues.”

Community survey data

Let’s also look at some data rather than simply deferring to “big name” EAs.

Rethink Priorities last ran a survey in 2020, and it shows interesting results on where EAs are prioritising their attention and resources.

Global poverty was selected as the top cause that year (measured on a 5-point scale of how much of a priority a given cause area should be in resource distribution), narrowly beating out AI risks and climate change. However, when looking at engagement, the results change quite dramatically: the most engaged EAs prioritise longtermist and meta cause areas more than neartermist ones.

Cause prioritisation over time demonstrates a similar effect. Interest in AI risk and other x-risks has grown substantially since 2015, and global poverty has fallen. I expect this trend to continue if/when Rethink Priorities releases a more up-to-date survey.

Spending data

Next, let’s look at where EA[4] money is being spent.

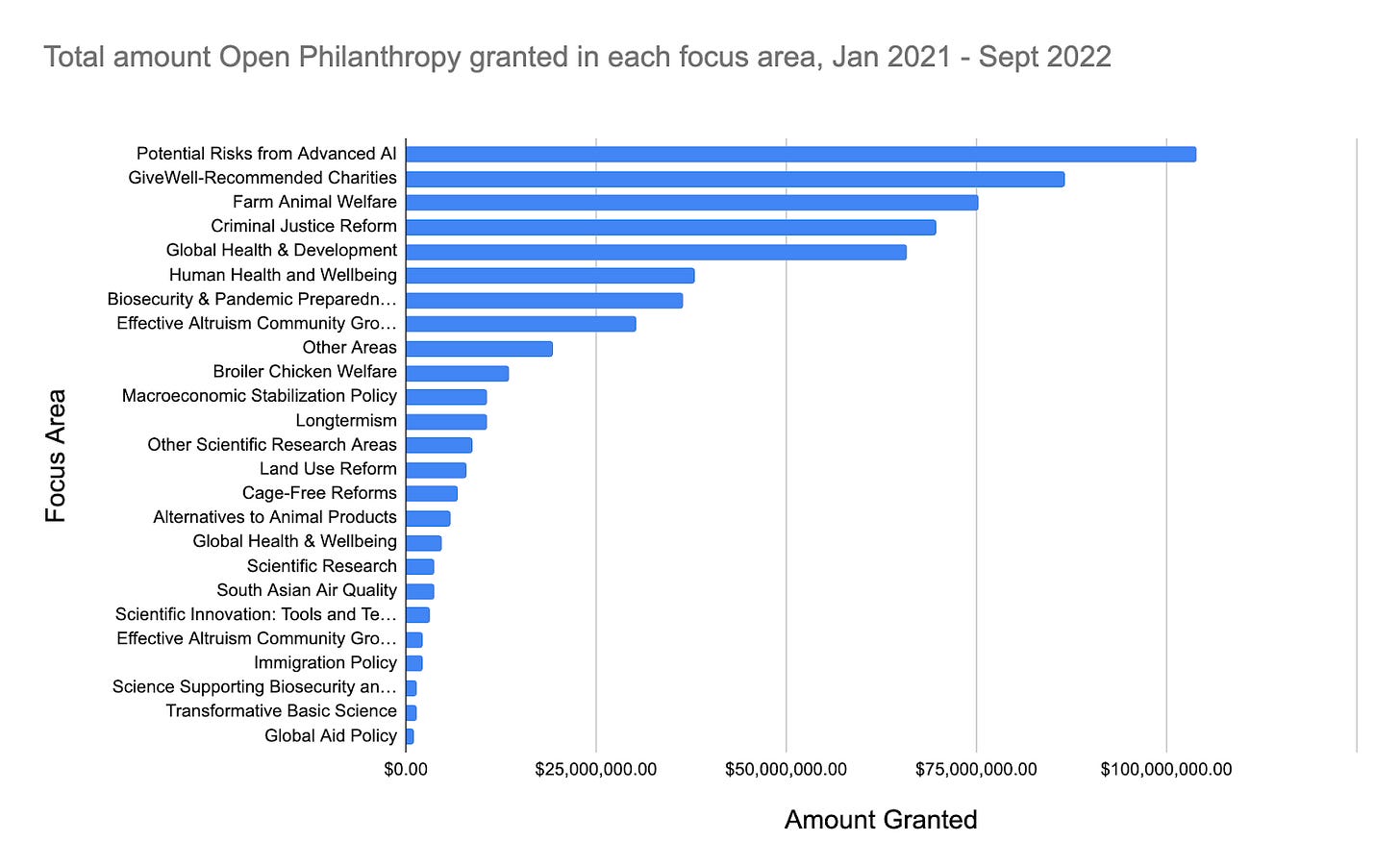

This graph from Open Philanthropy’s database of grants deployed from January 2021 to September 2022 paints a more balanced picture. Advanced AI is number one, but the following five focus areas aren’t explicitly longtermist.

I would argue, however, that this mostly reflects our lack of clarity about what to spend money on within longtermism. Global health and animal welfare charities benefit from more explicit feedback loops and much more legible causal paths to impact. OP can funnel money into things like bed nets and be pretty darn sure that this will do a lot of good in the world.

Longtermism, however, is much murkier. We really don’t know how to readily convert cash into x-risk reductions — if OP could throw millions (billions?) at reducing AI risk with a reasonable degree of confidence that it would make a dent, they probably would.

Moreover, Open Philanthropy practices “worldview diversification”, so it seems natural to expect a more balanced focus on nearterm and longterm cause areas.

As for FTX, I’m not sure if there is any data on how much they have spent on global health, but my guess is that most of their spending is focused on longtermism.

The Canva founders have also committed billions of dollars recently with a strong focus on global poverty, and with some EA-esque framings like “doing the most good we can”. It remains to be seen how intertwined these commitments will be with the EA community directly, but this could very well cut against my argument.

Special thanks to Luke Freeman, Grace Adams, and Sjir Hoeijmakers for their feedback and thoughts on this piece.

[1] For reference, a motte-and-bailey argument is a type of fallacy where someone advances a controversial stance, known as the “bailey”, and then retreats to an easier-to-defend one, known as the “motte”, when pressed.

[2] If only we had timelines that long. Yikes.

[3] Hot take: donating the majority of your income to people living in poverty is a pretty strong signal that you care about the wellbeing of people living in poverty.

[4] Organisations like the Gates Foundation certainly do things that are (lower case) ea, but I’m broadly referring to the most salient cases of explicitly EA funding in this instance.

Thanks for writing this, I also cringe when people argue that EA actually isn't focused on longtermism now when it's pretty clear ~all of the high-status EAs have moved toward it as well as the majority of highly-engaged EAs.

I might add an analogy to arguments around veganism. Anti-vegans often levy arguments like "your crops are picked by humans in bad conditions, why not fix the human suffering first before worrying about animals?" And the sensible response for a full-time vegan activist isn't to argue that many other vegans work on improving human lives, but to argue that they have limited resources which they think are best directed on the margin to reducing animal suffering. But this doesn't reduce the importance of human suffering.

I enjoyed this post and think it makes an important point. A quibble:

"Some EAs don’t care about longtermism whatsoever, focusing instead on legible ways to improve existing people’s lives, like providing vitamin A supplementation to children living in poverty. Others think global poverty is a “rounding error” and that the most important cause is protecting future generations. Others want animals to live happier lives. Some want all of these things."

This paragraph seemed a bit off, for two reasons. First, not all of these sentences are about what outcomes people do or do not want. They're about what people think should be prioritized on the margin, and so in that sense it's definitionally impossible to want "all of these things" (especially the rounding error one in combination with the other two). Secondly, and relatedly, if we instead construe the preceding sentences as being not about prioritization but as about outcomes - lower existential risk, less poverty, more animal welfare - then probably most EAs (and many cosmopolitan-minded people) do want all of those things, not just "some" of them.

(ETA: you make all of this clearer later on in the dialog, when the discussant distinguishes between "not caring" about poverty and choosing to prioritize something else)